Decision Tree Multi-classifier


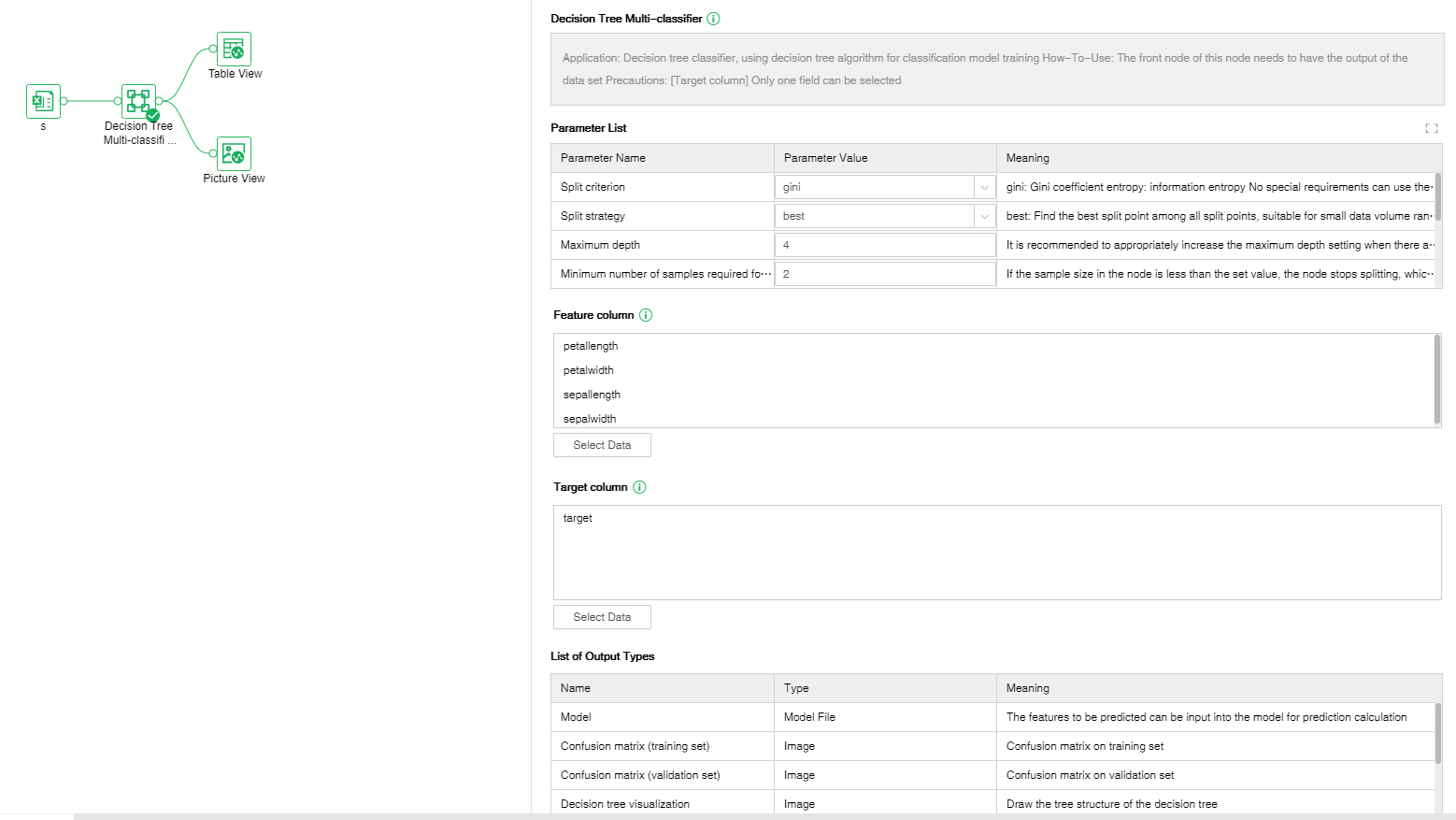

The Decision Tree Multi-classifier node trains a classification model using the decision tree algorithm.

How-To-Use:

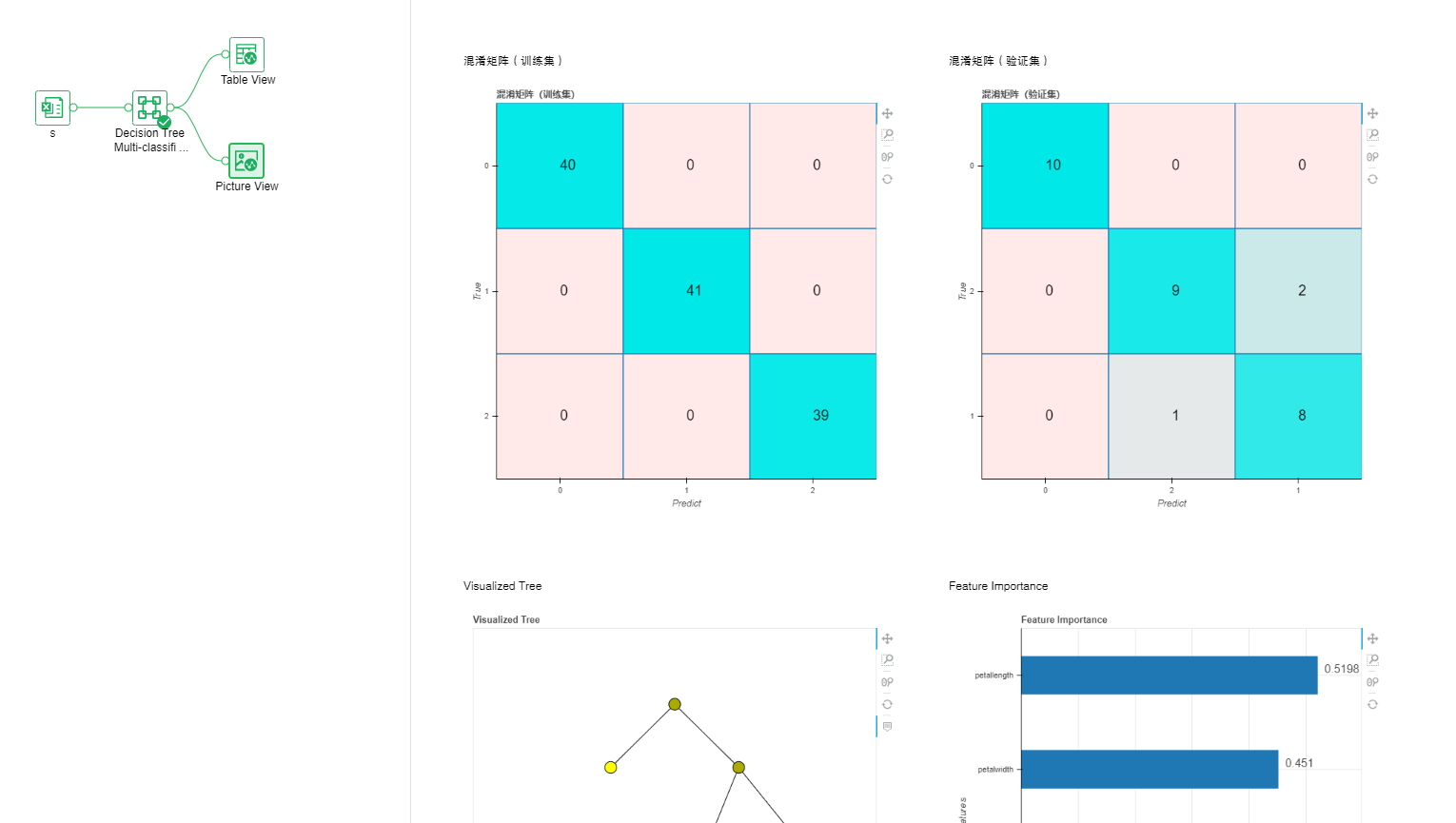

This node takes its input from a data set node. After configuring the node, connect a table view to view the performance metrics, or connect a picture view to view the ROC curve.
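This page does not list the node's exact metrics. As a general illustration of what an ROC curve plots, the sketch below computes (false positive rate, true positive rate) points from binary labels and predicted scores, and the area under the curve using the trapezoidal rule. The function names `roc_points` and `auc` are hypothetical. For a multi-class target, such a curve is typically drawn for one positive label against all other classes; that this node does the same is an assumption.

```python
def roc_points(y_true, scores):
    """Return ROC points (FPR, TPR) for binary labels y_true in {0, 1}."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    # Walk from the highest score down, lowering the threshold step by step.
    pairs = sorted(zip(scores, y_true), reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x2 - x1) * (y1 + y2) / 2 for (x1, y1), (x2, y2) in zip(points, points[1:]))


pts = roc_points([1, 0, 1, 0], [0.9, 0.8, 0.4, 0.2])
print(pts)       # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc(pts))  # 0.75
```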

Precautions:

[Target column] Only one field can be selected.

❖Configuration

After adding the Decision Tree Multi-classifier node to the experiment, you can configure it on the "Configuration" page on the right.

[Split criterion] Two options are available: gini (Gini impurity) and entropy (information entropy).
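Both criteria measure how mixed the class labels in a node are, and a split is chosen to make the child nodes purer. The standard formulas are Gini = 1 − Σ p_k² and entropy = −Σ p_k log₂ p_k, where p_k is the proportion of class k in the node. The sketch below computes both; using base-2 logarithms for entropy is a choice made here, not something stated by this page.

```python
import math
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the class proportions p_k."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Information entropy in bits: -sum(p_k * log2(p_k))."""
    n = len(labels)
    if n == 0:
        return 0.0
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


node = ["a", "a", "b", "c"]  # class proportions 0.5, 0.25, 0.25
print(gini(node))     # 0.625
print(entropy(node))  # 1.5
```

A pure node (one class only) scores 0 under both criteria.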

[Split strategy] best: evaluates all candidate split points and chooses the optimal one; suitable for small data volumes. random: randomly samples some candidate split points and chooses the best among them (a locally optimal split); suitable for large data volumes.
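The difference between the two strategies can be sketched on a single numeric feature, using Gini impurity to score each candidate threshold. This is a simplified illustration, not the node's implementation: "best" is assumed to try the midpoint between every pair of consecutive distinct values, and "random" is assumed to draw a few thresholds uniformly between the feature's minimum and maximum. The function names and the `n_candidates` parameter are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def weighted_child_gini(xs, ys, threshold):
    """Size-weighted Gini impurity of the two children created by x <= threshold."""
    left = [y for x, y in zip(xs, ys) if x <= threshold]
    right = [y for x, y in zip(xs, ys) if x > threshold]
    n = len(ys)
    return len(left) / n * gini(left) + len(right) / n * gini(right)


def best_split(xs, ys):
    """'best': try the midpoint between every pair of consecutive distinct values."""
    values = sorted(set(xs))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    if not candidates:
        return None  # all values identical: nothing to split
    return min(candidates, key=lambda t: weighted_child_gini(xs, ys, t))


def random_split(xs, ys, n_candidates=3, rng=None):
    """'random': draw a few thresholds at random and keep the best of those only."""
    rng = rng if rng is not None else random.Random()
    lo, hi = min(xs), max(xs)
    candidates = [rng.uniform(lo, hi) for _ in range(n_candidates)]
    return min(candidates, key=lambda t: weighted_child_gini(xs, ys, t))


xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = ["a", "a", "a", "b", "b", "b"]
print(best_split(xs, ys))  # 6.5
print(random_split(xs, ys, rng=random.Random(0)))
```

"best" always finds the clean split at 6.5 but scores every candidate; "random" scores only a handful, which is cheaper on large data but may return a worse threshold.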

[Maximum depth] Sets the maximum depth of the tree. When set to 0, there is no depth limit.

[Minimum number of samples required for split] If a node contains fewer samples than the set value, the node is not split further.

[Minimum sample number of leaf nodes] If any child node of the current node contains fewer samples than the set value, all child nodes are pruned.

[The minimum sample weight sum of leaf nodes] If the sum of sample weights in any child node of the current node is less than the set value, all child nodes are pruned.
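The stopping and pruning rules above (maximum depth, minimum samples to split, minimum samples per leaf, minimum weight per leaf) can be sketched as two checks. This is a minimal sketch assuming depth is counted from 0 at the root and that the leaf weight rule compares each child's absolute weight sum with the set value, as worded above; scikit-learn's similar `min_weight_fraction_leaf` parameter instead uses a fraction of the total sample weight. The function names `can_split` and `split_is_allowed` are hypothetical.

```python
def can_split(n_samples, depth, max_depth, min_samples_split):
    """Node-level rules: may this node be split at all?

    max_depth=None means no depth limit (the node setting 0).
    """
    if max_depth is not None and depth >= max_depth:
        return False  # node already sits at the maximum depth
    return n_samples >= min_samples_split


def split_is_allowed(left_weights, right_weights, min_samples_leaf, min_weight_leaf):
    """Child-level rules: keep the split only if every child is large enough.

    Each argument lists the sample weights that fall into that child.
    Returning False means both children are pruned.
    """
    for child in (left_weights, right_weights):
        if len(child) < min_samples_leaf or sum(child) < min_weight_leaf:
            return False
    return True


print(can_split(n_samples=10, depth=2, max_depth=3, min_samples_split=2))  # True
print(can_split(n_samples=10, depth=3, max_depth=3, min_samples_split=2))  # False
print(split_is_allowed([1.0] * 8, [1.0], min_samples_leaf=2, min_weight_leaf=0.0))  # False
```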

[Maximum number of features] The maximum number of features considered when searching for a split. auto: sqrt(total number of features); sqrt: sqrt(total number of features); log2: log2(total number of features); None: total number of features.
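The four options can be turned into a concrete feature count as follows. Rounding down and keeping at least one feature are assumptions made for this sketch (scikit-learn behaves this way for its `max_features` parameter, but this page does not say how the node rounds). The function name is hypothetical.

```python
import math


def resolve_max_features(option, n_features):
    """Number of features to consider per split for each option above."""
    if option is None:
        return n_features
    if option in ("auto", "sqrt"):
        return max(1, int(math.sqrt(n_features)))  # rounded down, at least 1
    if option == "log2":
        return max(1, int(math.log2(n_features)))  # rounded down, at least 1
    raise ValueError(f"unknown max_features option: {option!r}")


for opt in ("auto", "sqrt", "log2", None):
    print(opt, resolve_max_features(opt, 100))
# auto 10
# sqrt 10
# log2 6
# None 100
```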

[Random Seed] Sets the seed used for random number generation. When set to 0, no fixed seed is used.

[Largest leaf node] Limits the total number of leaf nodes in the tree. When set to 0, there is no limit on the number of leaf nodes.
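Maximum depth, random seed, and largest leaf node all use 0 to mean "disabled". If these settings are passed to a library such as scikit-learn, whose `max_depth`, `random_state`, and `max_leaf_nodes` parameters use None for "no limit" or "no fixed seed", the 0 has to be translated first. The sketch below shows that translation; the setting keys and the function name are hypothetical, and whether the node actually wraps scikit-learn is an assumption.

```python
def normalize_settings(settings):
    """Replace 0 with None for settings where 0 means 'disabled'."""
    zero_means_disabled = ("max_depth", "random_seed", "max_leaf_nodes")
    out = dict(settings)  # leave the caller's dict unchanged
    for key in zero_means_disabled:
        if out.get(key) == 0:
            out[key] = None
    return out


settings = {"max_depth": 0, "random_seed": 42, "max_leaf_nodes": 0, "criterion": "gini"}
print(normalize_settings(settings))
# {'max_depth': None, 'random_seed': 42, 'max_leaf_nodes': None, 'criterion': 'gini'}
```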

[Minimum impurity reduction] If splitting the current node reduces impurity by less than the set value, all child nodes are pruned.
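The impurity reduction of a split is the parent's impurity minus the size-weighted impurity of its children. The sketch below also scales the result by the fraction of all training samples that reach the node (N_t / N), following scikit-learn's definition of `min_impurity_decrease`; whether this node applies the same scaling is an assumption. Gini impurity is used as the measure, and the function name is hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def impurity_decrease(parent, left, right, n_total):
    """Weighted impurity decrease: N_t / N * (parent - weighted children)."""
    n_t = len(parent)
    weighted_children = (len(left) * gini(left) + len(right) * gini(right)) / n_t
    return n_t / n_total * (gini(parent) - weighted_children)


# A node holding 4 of 8 training samples, split into two pure children.
parent = ["a", "a", "b", "b"]
print(impurity_decrease(parent, ["a", "a"], ["b", "b"], n_total=8))  # 0.25
```

With a minimum impurity reduction above 0.25, this split would be rejected.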

[Category weight] balanced: automatically weights classes so that the samples are balanced; None: no class balancing is performed.
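A common definition of "balanced" class weights, used for example by scikit-learn, gives each class the weight n_samples / (n_classes × class_count), so rarer classes count more. The sketch below assumes the node uses this formula; the function name is hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight per class: n_samples / (n_classes * count of that class)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


print(balanced_class_weights(["a", "a", "a", "b"]))
# {'a': 0.6666666666666666, 'b': 2.0}
```

After weighting, each class contributes the same total weight (here 3 × 2/3 = 1 × 2 = 2).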

[Positive label] Enter None or the label of the positive class (an integer or text value from the target column). If None is entered, 1 is treated as the positive class, and the target column may contain only the values (0, 1) or (-1, 1).
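The positive label rule can be sketched as a function that marks the positive class as 1 and every other value as 0. Treating all non-positive values as negative (a one-vs-rest view, as used for a single ROC curve on a multi-class target) is an assumption about how the node uses this setting; the function name is hypothetical.

```python
def binarize_target(labels, positive_label=None):
    """Map the target column to 1 (positive class) and 0 (all other values).

    positive_label=None follows the rule above: the column may contain only
    (0, 1) or (-1, 1), and 1 is the positive class.
    """
    if positive_label is None:
        values = set(labels)
        if not (values <= {0, 1} or values <= {-1, 1}):
            raise ValueError("with positive label None, the target must be (0, 1) or (-1, 1)")
        positive_label = 1
    return [1 if y == positive_label else 0 for y in labels]


print(binarize_target([0, 1, 1, 0]))                                # [0, 1, 1, 0]
print(binarize_target([-1, 1, -1]))                                 # [0, 1, 0]
print(binarize_target(["cat", "dog", "bird"], positive_label="dog"))  # [0, 1, 0]
```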

[Proportion of training set] The proportion of the data set used for training, typically 0.8; the remaining samples are used as the validation set.
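A training proportion of 0.8 means that 80% of the rows are used to fit the tree and the other 20% are held out for validation. The sketch below shuffles the rows with an optional seed and then cuts them at that proportion. Shuffling before the cut and rounding the training size down are assumptions; the function name is hypothetical.

```python
import random


def train_validation_split(rows, train_ratio=0.8, seed=None):
    """Shuffle rows and split them into (training set, validation set)."""
    if not 0 < train_ratio < 1:
        raise ValueError("train_ratio must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seed=None: different order each run
    cut = int(len(shuffled) * train_ratio)  # training size, rounded down
    return shuffled[:cut], shuffled[cut:]


train, valid = train_validation_split(range(100), train_ratio=0.8, seed=7)
print(len(train), len(valid))  # 80 20
```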

[Parallel coordinate graph sub-cylinder number] Sets the number of bins for each feature on the parallel coordinates plot. When there are many features, consider reducing the number of bins. The value must be an integer greater than or equal to 2.
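Binning groups the values of each feature into a fixed number of intervals so that the parallel coordinates plot stays readable. The sketch below uses equal-width bins between the feature's minimum and maximum; the node's actual binning method is not stated on this page, so equal-width binning and the function name are assumptions.

```python
def equal_width_bins(values, n_bins):
    """Assign each value a bin index 0..n_bins-1 using equal-width intervals."""
    if not isinstance(n_bins, int) or n_bins < 2:
        raise ValueError("n_bins must be an integer >= 2")
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)  # constant feature: everything in one bin
    width = (hi - lo) / n_bins
    # min(..., n_bins - 1) keeps the maximum value in the last bin
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


print(equal_width_bins([0, 2.5, 5, 7.5, 10], n_bins=4))  # [0, 1, 2, 3, 3]
```

Fewer bins produce fewer distinct positions per axis, which is why reducing the bin count helps when many features are plotted.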

[Feature column] Select the feature columns from the upstream node. Multiple columns can be selected.

[Target column] Select the target column from the upstream node. Only one column can be selected, and it must contain categorical (class) data.

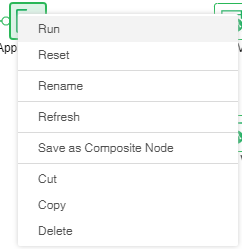

Right-click menu of the Decision Tree Multi-classifier node:

❖Run Decision Tree Multi-classifier Node

Runs the node: the data is passed to DM-Engine for computation, and the output results are returned.

❖Reset Decision Tree Multi-classifier Node

Resets a node that has already been run: the returned results are deleted and the node status changes to not run.

❖Rename Decision Tree Multi-classifier Node

In the right-click menu of the Decision Tree Multi-classifier node, select "Rename" to rename the node.

❖Refresh Decision Tree Multi-classifier Node

In the right-click menu of the Decision Tree Multi-classifier node, select "Refresh" to synchronize the node's data and parameter information.

❖Save as Composite Node

In the right-click menu of the Decision Tree Multi-classifier node, select "Save as Composite Node" to save the selected node as a composite node for reuse. The saved node keeps the same parameters as the original node.

❖Cut Decision Tree Multi-classifier Node

In the right-click menu of the Decision Tree Multi-classifier node, select "Cut" to cut the node.

❖Copy Decision Tree Multi-classifier Node

In the right-click menu of the Decision Tree Multi-classifier node, select "Copy" to copy the node.

❖Delete Decision Tree Multi-classifier Node

In the right-click menu of a Decision Tree Multi-classifier node, select "Delete", or press the Delete key on the keyboard, to delete the node together with its input and output connections.