|

<< Click to Display Table of Contents >> Map Side Join |

|

|

<< Click to Display Table of Contents >> Map Side Join |

|

In the distributed system, when star data (one big table and several small tables) needing join, the data in the small tables can be copied to every Map node to execute Map Side Join, not having to carry out join operation on Reduce node, so as to improve the efficiency of table join.

Hadoop uses DistributedCache to realize Map side join. It can distribute small files to each node, and import small files in memory to use during join. Based on Hadoop, Spark uses Broadcast to realize Map side join. The efficiency of Broadcast file distribution is obviously better than DistributedCache, because it adopts the optimized file broadcast algorithm, including P2P algorithm.

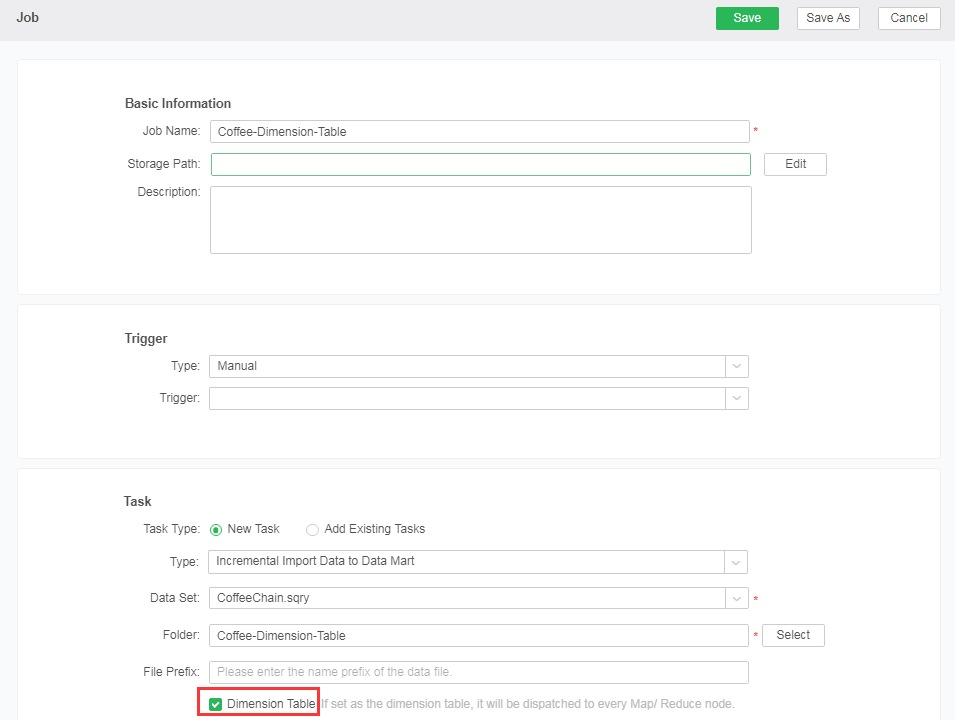

In MPP mart, we can define the dimension table, i.e., the small table in join operation. When users import dimension tables, the system distributes the dimension table to each node, as shown in the image below:

When adding a new node, the current dimension table needs to be distributed to this node.

When executing the data mart data set, the conditions of proceeding Map Side Join are as follows:

(1) Composite Data Set, self-service data set or SQL data set of Yonghong.

(2) In Join operation, it must conform with star data, and the small table is dimension table (It requires that in Join operation, in all tables, only one table is non-dimension table)

While Naming node deploys Map Task, it assigns tasks to the nodes with dimension table as the priority, then sends the needed information of dimension table to the corresponding Map node. If there is no corresponding information of dimension table for Map node, it will request Naming node to get dimension table to save at the local.

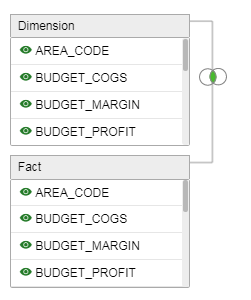

Composite data set and self-service data set use join operator (inner join, left join, right join and external join) to realize Join, the composite data set is shown in the image as below:

(3) In version 8.5.1, the Mapsidejoin is further optimized. On the basis of satisfying the dimension fact table (large table join small table), the function of partitioning into the market is added to enable the Mapsidejoin between the two large tables to improve the computational execution efficiency of the join.

Note: Mapsidejoin using fragmented columns must meet the following requirements:

1. To use the fragmentation column function, we need to first integrate the sub-data of the combined data set into the market: through the way of new scheduling task-incremental import into the market, select the fragmentation column attributes, and select the join column when joining for fragmentation.

2. The two sub-datasets participating in the join need to ensure that the join columns selected in the join conditions are partitioned, and the partitioned columns checked in the market are used as join conditions.

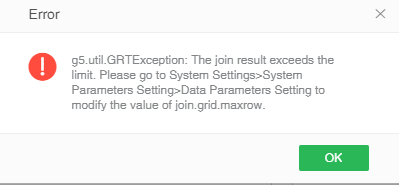

In the 8.5 version, on the Home -> Management System -> System Settings page, add the parameters in the System Parameter Setting -> Data Parameters Setting area: join.grid.maxrow as shown below

Through the parameter control, the product limits the result set of the join to avoid the jam caused by the result set of the join. When the result set of the join exceeds the parameter limit, the warning message is pushed and the join is stopped.

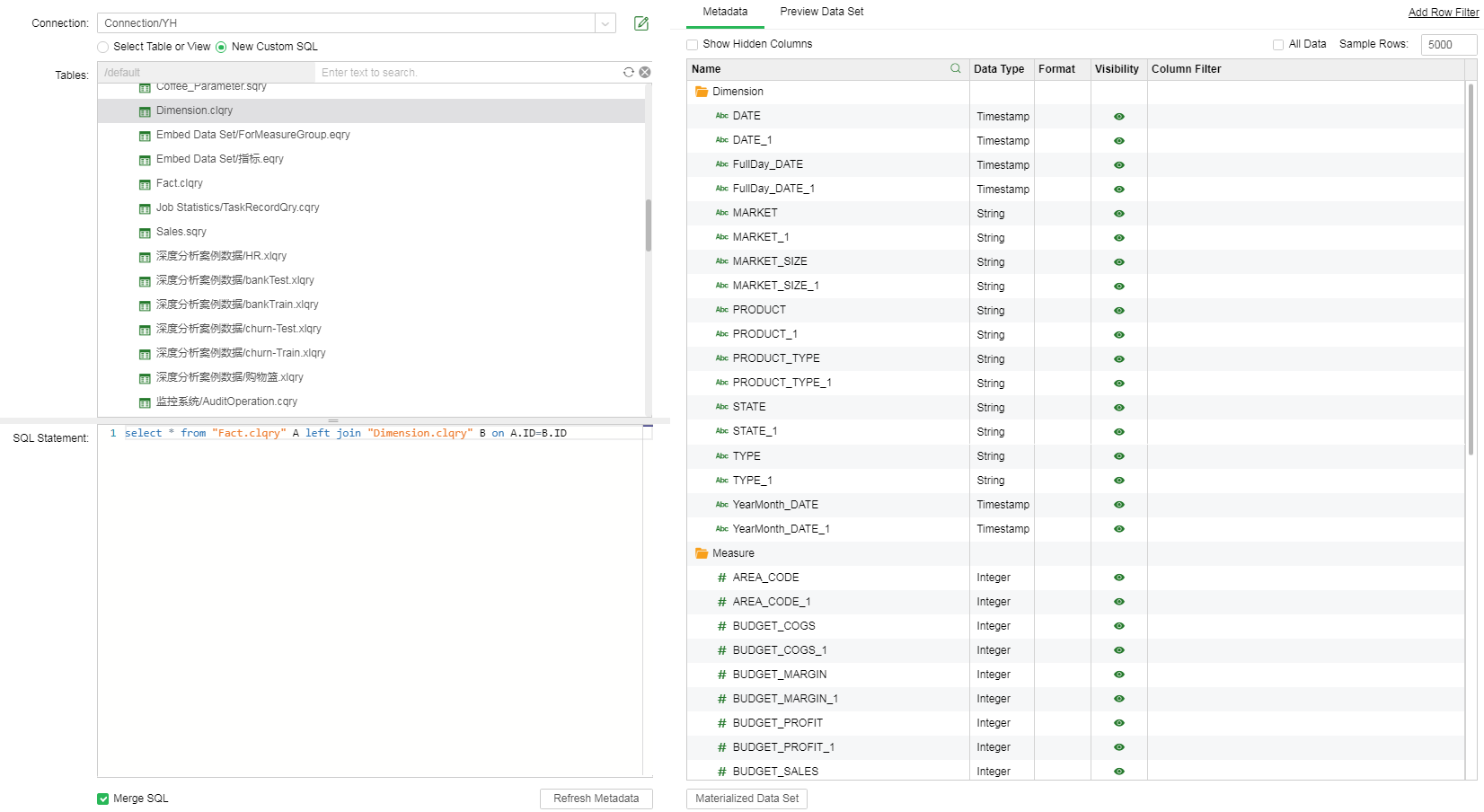

The realization of MapSideJoin by Yonghong SQL data set needs to create SQL data set of Yonghong. Entering the following SQL statement in data set,we can realize the join of fact table and dimension table, as shown in the image below: